Running the sample code for ai cast

Get sample code for ai cast

The sample code can be found here.

$ git clone https://github.com/Idein/actcast-app-examples.git

Running the sample code for ai cast

Set up the application development environment as described in the Actcast Documents: App Development Tutorial.

Go to the imagenet-classification-for-aicast directory of the sample code and execute the following command.

$ make

To confirm the operation of the sample code, we will use an ai cast that has been set up as described in the Actcast Documents: Test Actcast application on a local device.

Sample Code Supplementary Information

The sample code calls the shared library from Python. An overview of each file is provided below.

app/resnet_v1_18.hef

Below is an example of how to convert resnet_v1_18.onnx to a hef file (the binary file loaded into Hailo).

For details, including how to convert other models, refer to the Hailo Dataflow Compiler User Guide distributed in the Hailo Developer Zone. To download the software, you must create an account at https://hailo.ai/ and apply for Developer Zone access.

If errors occur during model conversion, contact Hailo via Contact Customer Support.

Install the Dataflow Compiler as described in the Hailo documentation (Hailo Dataflow Compiler User Guide).

Conversion of onnx to har

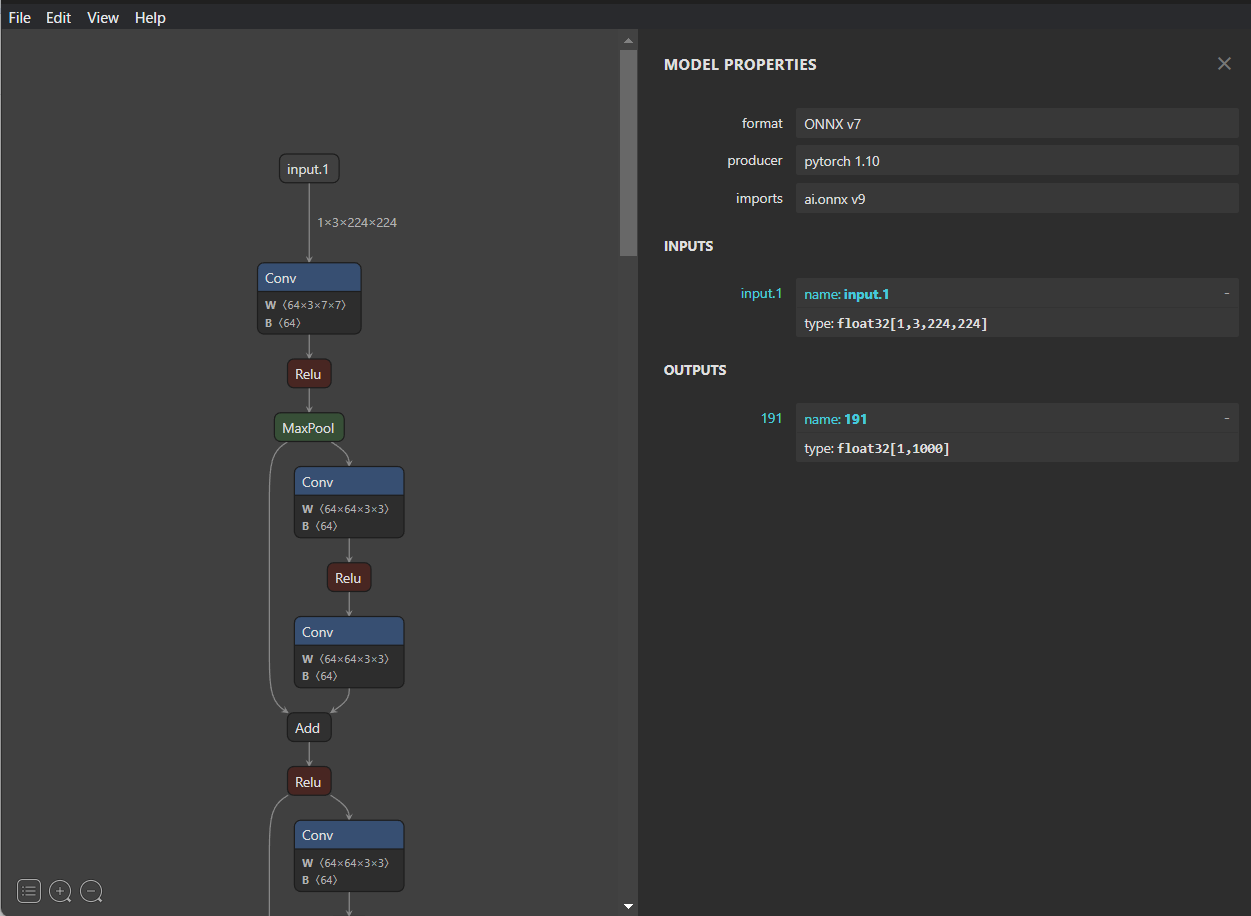

We checked the model's inputs and outputs in Netron and specified input.1 for --start-node-names and 191 for --end-node-names.

$ hailo parser onnx resnet_v1_18.onnx --start-node-names input.1 --end-node-names 191 --hw-arch hailo8

Prepare Allocator Script (ALLS) files

The Allocator Script (or Model Script) is a file containing instructions for allocating the resources of the Hailo chip. In addition to running the neural network, the Hailo chip can perform pre-processing on the input, such as resizing, normalization, and RGB->YUV conversion. To run such processing on Hailo, the corresponding instructions must be written in the ALLS file.
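As a rough illustration of what a normalization step amounts to, the following NumPy sketch applies a per-channel mean and standard deviation to an RGB image on the host. The helper name `normalize_rgb` is hypothetical, the mean and standard deviation are the values used in this sample's ALLS file, and the reading of normalization as (x - mean) / std per channel is an assumption that should be checked against the Hailo Dataflow Compiler User Guide.

```python
import numpy as np

# Per-channel values taken from this sample's ALLS file (RGB order assumed).
MEAN = np.array([123.675, 116.28, 103.53], dtype=np.float32)
STD = np.array([58.395, 57.12, 57.375], dtype=np.float32)


def normalize_rgb(image_u8, mean=MEAN, std=STD):
    """Return (image - mean) / std as float32 for an (H, W, 3) uint8 image."""
    image = np.asarray(image_u8, dtype=np.float32)
    # mean and std broadcast over the last (channel) axis.
    return (image - mean) / std
```

When this step runs on the Hailo chip instead, the application can feed raw 8-bit pixels, which is what the sample's C code does with its unsigned char input buffer.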

normalization1 = normalization([123.675, 116.28, 103.53], [58.395, 57.12, 57.375])

model_optimization_config(calibration, batch_size=1, calibset_size=10000)

Prepare Calibration Dataset

Quantization from a 32-bit float f to an 8-bit (or 4-bit) integer i is defined by the following relation.

f = scale * (i - bias)

When performing this conversion, we must pass sample input data (a calibration dataset) so that scale and bias can be determined.
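To make the relation between f, i, scale, and bias concrete, here is a minimal sketch that derives scale and bias from the minimum and maximum of some sample data and then quantizes and dequantizes with them. The min/max rule and the helper names are assumptions for illustration only; the Dataflow Compiler's actual calibration procedure is not described here and is likely more elaborate.

```python
import numpy as np


def fit_affine_params(samples, num_bits=8):
    """Pick (scale, bias) so that min(samples) maps to 0 and max to 2**num_bits - 1."""
    lo, hi = float(np.min(samples)), float(np.max(samples))
    levels = 2 ** num_bits - 1
    scale = (hi - lo) / levels if hi > lo else 1.0
    bias = -lo / scale  # solves lo = scale * (0 - bias)
    return scale, bias


def quantize(f, scale, bias, num_bits=8):
    """Invert f = scale * (i - bias), rounding and clipping to the integer range."""
    i = np.round(np.asarray(f, dtype=np.float64) / scale + bias)
    # uint8 storage is only valid for num_bits <= 8.
    return np.clip(i, 0, 2 ** num_bits - 1).astype(np.uint8)


def dequantize(i, scale, bias):
    """Apply f = scale * (i - bias)."""
    return scale * (np.asarray(i, dtype=np.float64) - bias)
```

With these definitions, values inside the calibrated range come back from a quantize/dequantize round trip with an error of at most scale / 2, which is why the range of the sample data matters.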

Create a calibration dataset (calib_set.npy) using imagenette. Since ResNet-V1-18 takes 224x224 RGB images as input, we create an array of shape (N, 224, 224, 3).

import glob

import numpy as np
from PIL import Image

# Collect every imagenette JPEG, resized to the model's 224x224 RGB input.
images = []
for f in glob.glob('imagenette2-320/**/*.JPEG', recursive=True):
    image = Image.open(f).convert('RGB').resize((224, 224))
    images.append(np.asarray(image))

# Stack into a single (N, 224, 224, 3) array and save it.
np.save('calib_set.npy', np.stack(images, axis=0))

Perform model quantization
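Before running the optimizer, it can be worth checking that the calibration array has the layout described above. The following is a small sketch of such a check; the helper name `check_calib_set` is hypothetical, and the expected uint8 dtype is simply what the script above produces from PIL RGB images, not a documented requirement.

```python
import numpy as np


def check_calib_set(calib, height=224, width=224, channels=3):
    """Return a list of human-readable problems; an empty list means the array looks fine."""
    problems = []
    if calib.ndim != 4 or calib.shape[1:] != (height, width, channels):
        problems.append(
            f"expected shape (N, {height}, {width}, {channels}), got {calib.shape}"
        )
    if calib.dtype != np.uint8:
        problems.append(f"expected dtype uint8, got {calib.dtype}")
    return problems
```

A typical use would be `check_calib_set(np.load('calib_set.npy'))`, printing any returned messages before starting the quantization step.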

Quantize the model by specifying the Allocator Script (resnet_v1_18.alls) and the calibration dataset (calib_set.npy) as follows. The quantized model is saved as resnet_v1_18_quantized.har.

$ hailo optimize resnet_v1_18.har --calib-set-path calib_set.npy --model-script resnet_v1_18.alls --hw-arch hailo8

Compilation to hef

Generate the binary file (.hef) to be loaded into Hailo as follows.

$ hailo compiler resnet_v1_18_quantized.har --hw-arch hailo8

Dockerfile

Create a Docker image for cross-compiling the C code (src/resnet_v1_18.c) against the HailoRT library.

FROM idein/cross-rpi:armv6-slim

ENV SYSROOT /home/idein/x-tools/armv6-rpi-linux-gnueabihf/armv6-rpi-linux-gnueabihf/sysroot

ADD root.tar $SYSROOT

RUN sudo rm $SYSROOT/usr/lib/libhailort.so

RUN sudo ln -s $SYSROOT/usr/lib/libhailort.so.4.10.0 $SYSROOT/usr/lib/libhailort.so

src/resnet_v1_18.c

#include <assert.h>

#include "hailo/hailort.h"

#define MAX_EDGE_LAYERS (16)

#define HEF_FILE ("resnet_v1_18.hef")

static hailo_vdevice vdevice = NULL;

static hailo_hef hef = NULL;

static hailo_configure_params_t config_params = {0};

static hailo_configured_network_group network_group = NULL;

static size_t network_group_size = 1;

static hailo_input_vstream_params_by_name_t input_vstream_params[MAX_EDGE_LAYERS] = {0};

static hailo_output_vstream_params_by_name_t output_vstream_params[MAX_EDGE_LAYERS] = {0};

static hailo_activated_network_group activated_network_group = NULL;

static size_t vstreams_infos_size = MAX_EDGE_LAYERS;

static hailo_vstream_info_t vstreams_infos[MAX_EDGE_LAYERS] = {0};

static hailo_input_vstream input_vstreams[MAX_EDGE_LAYERS] = {NULL};

static hailo_output_vstream output_vstreams[MAX_EDGE_LAYERS] = {NULL};

static size_t input_vstreams_size = MAX_EDGE_LAYERS;

static size_t output_vstreams_size = MAX_EDGE_LAYERS;

/* Run one inference: input0 is a 224x224 RGB image, out0 receives 1000 floats. */
int infer(unsigned char *input0, float *out0)
{
    unsigned char q_out0[1000];
    hailo_status status = HAILO_UNINITIALIZED;

    /* Feed Data */
    status = hailo_vstream_write_raw_buffer(input_vstreams[0], input0, 224 * 224 * 3);
    assert(status == HAILO_SUCCESS);
    status = hailo_flush_input_vstream(input_vstreams[0]);
    assert(status == HAILO_SUCCESS);
    status = hailo_vstream_read_raw_buffer(output_vstreams[0], q_out0, 1000);
    assert(status == HAILO_SUCCESS);

    /* dequantize: f = scale * (i - zp), using the quantization info of entry 1 */
    float scale = vstreams_infos[1].quant_info.qp_scale;
    float zp = vstreams_infos[1].quant_info.qp_zp;
    for (int i = 0; i < 1000; i++)
        out0[i] = scale * (q_out0[i] - zp);

    return status;
}

/* Open the device, load the hef, and create and activate the virtual streams. */
int init()
{
    hailo_status status = HAILO_UNINITIALIZED;

    status = hailo_create_vdevice(NULL, &vdevice);
    assert(status == HAILO_SUCCESS);
    status = hailo_create_hef_file(&hef, HEF_FILE);
    assert(status == HAILO_SUCCESS);
    status = hailo_init_configure_params(hef, HAILO_STREAM_INTERFACE_PCIE, &config_params);
    assert(status == HAILO_SUCCESS);
    status = hailo_configure_vdevice(vdevice, hef, &config_params, &network_group, &network_group_size);
    assert(status == HAILO_SUCCESS);
    status = hailo_make_input_vstream_params(network_group, true, HAILO_FORMAT_TYPE_AUTO,
                                             input_vstream_params, &input_vstreams_size);
    assert(status == HAILO_SUCCESS);
    status = hailo_make_output_vstream_params(network_group, true, HAILO_FORMAT_TYPE_AUTO,
                                              output_vstream_params, &output_vstreams_size);
    assert(status == HAILO_SUCCESS);
    status = hailo_hef_get_all_vstream_infos(hef, NULL, vstreams_infos, &vstreams_infos_size);
    assert(status == HAILO_SUCCESS);
    status = hailo_create_input_vstreams(network_group, input_vstream_params, input_vstreams_size, input_vstreams);
    assert(status == HAILO_SUCCESS);
    status = hailo_create_output_vstreams(network_group, output_vstream_params, output_vstreams_size, output_vstreams);
    assert(status == HAILO_SUCCESS);
    status = hailo_activate_network_group(network_group, NULL, &activated_network_group);
    assert(status == HAILO_SUCCESS);

    return status;
}

void destroy() {
    (void) hailo_deactivate_network_group(activated_network_group);
    (void) hailo_release_output_vstreams(output_vstreams, output_vstreams_size);
    (void) hailo_release_input_vstreams(input_vstreams, input_vstreams_size);
    (void) hailo_release_hef(hef);
    (void) hailo_release_vdevice(vdevice);
}

Makefile

SOURCE=resnet_v1_18.c
TARGET=libresnet_v1_18.so

all: app/$(TARGET)

app/$(TARGET): src/$(SOURCE)
	docker build -t cross-rpi .
	docker run -it --rm -d --name cross cross-rpi /bin/bash
	docker cp src cross:/home/idein/src
	docker exec -it cross armv6-rpi-linux-gnueabihf-gcc -W -Wall -Wextra -Werror -O2 -pipe -fPIC -std=c99 -I src src/$(SOURCE) -mcpu=arm1176jzf-s -mfpu=vfp -mfloat-abi=hard -shared -o $(TARGET) -lhailort -lpthread
	docker cp cross:/home/idein/$(TARGET) app/$(TARGET)
	docker stop cross

root.tar

root.tar is a set of files for accessing Hailo from the Actcast application.

If a file named root.tar exists in the app's root directory, actdk includes its contents in the app's Docker image.

app/model.py

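from ctypes import cdll

import numpy as np


class Model:
    def __init__(self):
        # Load the cross-compiled shared library and set up Hailo.
        self.lib = cdll.LoadLibrary('./libresnet_v1_18.so')
        self.lib.init()

    def __del__(self):
        self.lib.destroy()

    def infer(self, image):
        out = np.zeros(1000, dtype=np.float32)
        # Pass the addresses of the input image and the output buffer.
        self.lib.infer(
            image.ctypes.data,
            out.ctypes.data
        )
        # Return a 1-tuple so that callers can unpack with `probs, = ...`.
        return out,

app/main (Excerpts)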
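Model.infer forwards the address of the image buffer to the C function, which reads exactly 224 * 224 * 3 bytes from it, so the array handed over by the main script should be a C-contiguous uint8 array of that size. Below is a minimal sketch of a guard that could be applied before calling infer; the helper name `as_model_input` is hypothetical and is not part of the sample code.

```python
import numpy as np

INPUT_SHAPE = (224, 224, 3)  # height, width, RGB channels read by infer()


def as_model_input(image):
    """Return a C-contiguous uint8 array of shape (224, 224, 3), or raise ValueError."""
    # Copies only when the input is not already contiguous uint8.
    array = np.ascontiguousarray(image, dtype=np.uint8)
    if array.shape != INPUT_SHAPE:
        raise ValueError(f"expected shape {INPUT_SHAPE}, got {array.shape}")
    return array
```

Note that this sketch silently casts other dtypes to uint8; a stricter variant could reject them instead.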

#!/usr/bin/python3
import argparse
import subprocess
import time
import os
from PIL import Image, ImageDraw, ImageFont
import actfw_core
from actfw_core.task import Pipe, Consumer
from actfw_core.capture import V4LCameraCapture
import actfw_raspberrypi
from actfw_raspberrypi.vc4 import Display
import numpy as np
from model import Model
from configuration import *

(CAPTURE_WIDTH, CAPTURE_HEIGHT) = (224, 224)  # capture image size
(DISPLAY_WIDTH, DISPLAY_HEIGHT) = (640, 480)  # display area size


class Classifier(Pipe):

    def __init__(self, settings, capture_size):
        super(Classifier, self).__init__()
        self.model = Model()
        self.settings = settings
        self.capture_size = capture_size

    def proc(self, frame):
        captured_image = Image.frombuffer('RGB', self.capture_size, frame.getvalue(), 'raw', 'RGB')
        if self.settings['resize_method'] == 'crop':
            # Cut a 224x224 region out of the center of the frame.
            if self.capture_size != (CAPTURE_WIDTH, CAPTURE_HEIGHT):
                w, h = self.capture_size
                captured_image = captured_image.crop((w // 2 - CAPTURE_WIDTH // 2, h // 2 - CAPTURE_HEIGHT // 2, w // 2 + CAPTURE_WIDTH // 2, h // 2 + CAPTURE_HEIGHT // 2))
        else:
            # Cut the largest centered square, then scale it down to 224x224.
            w, h = self.capture_size
            l = min(w, h)
            captured_image = captured_image.crop((w // 2 - l // 2, h // 2 - l // 2, w // 2 + l // 2, h // 2 + l // 2))
            captured_image = captured_image.resize((CAPTURE_WIDTH, CAPTURE_HEIGHT))
        input_image = np.asarray(captured_image)
        probs, = self.model.infer(input_image)
        return (captured_image, probs)
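The two crop expressions in proc follow the same pattern and may be easier to read when factored out. The following sketch computes the same boxes as pure functions; the names `center_crop_box` and `square_crop_box` are hypothetical and do not appear in the sample code.

```python
def center_crop_box(src_size, crop_size):
    """Return the (left, upper, right, lower) box of a crop centered in the source."""
    w, h = src_size
    cw, ch = crop_size
    return (w // 2 - cw // 2, h // 2 - ch // 2,
            w // 2 + cw // 2, h // 2 + ch // 2)


def square_crop_box(src_size):
    """Return the box of the largest centered square, as used before resizing."""
    side = min(src_size)
    return center_crop_box(src_size, (side, side))


print(center_crop_box((640, 480), (224, 224)))  # (208, 128, 432, 352)
print(square_crop_box((640, 480)))              # (80, 0, 560, 480)
```

Either tuple can be passed directly to PIL's Image.crop. Because of the integer division, an odd crop size yields a box one pixel smaller than requested, which is also true of the original expressions.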
